How We Misunderstand AI

We define AI so narrowly that we miss it, even though it is already all around us. Artificial Intelligence means both as smart as us and as dumb as us. As big as us and also as small. We are looking for ourselves when we should be looking for something else.

Self

The biggest problem is our conception of the individual self. Thus we look for individual AIs, but as the Buddha told us 2,600 years ago, even the human self doesn’t really exist. I spent years in Western Philosophy classes going over the contradictions in this assumption which just go away if you don’t assume it.

The truth is that evolution doesn’t act on the individual level much at all. The individual man is about as relevant as the individual scrotum. Evolution happens at the species level, where our code is mixed together. Yet we fixate on the brief moments where it’s separated, like a baseball player clutching his balls.

If you start from the assumption that there’s nothing sacrosanct about the self, you can start to see other selves everywhere. A family, a nation, a corporation, a bee hive. They’re all equally real or—more specifically—equally unreal. Selves are just different ways of looking at things, and having a (one) body is not necessarily what we need to be looking for.

This is why we completely miss corporate AIs, which have been legal persons for hundreds of years, and which already significantly dominate us. A corporation is literally a body: the word comes from corporare, Latin for “embody, make or fashion into a body.” It goes back to the ‘Proto-Indo-European root meaning “body, form, appearance,” probably a verbal root meaning “to appear.”’

These artificial bodies are already here, already crushing our bones in their gears. We just miss them because we’re looking for individual bodies like ours, and not collective bodies which include us in symbiosis. But this is closer to how life actually evolves.

Early primordial evolution was all mergers and acquisitions. Cells ate and cohabitated with other cells to form complex cells and, eventually, multi-cellular life. We still carry obvious evidence of these ancient mergers and acquisitions, most notably our mitochondria. This ‘powerhouse of the cell’ was an acquisition. It still carries its own DNA inside each and every ‘human’ cell.

Single-celled life would not recognize multi-cellular life any more than we recognize corporations, and we think we’re so much smarter. We’re just a different scale of dumb. Everyone’s an amoeba to someone.

We literally scurry around inside corporations all day, so why do we think we’re more alive than them? We’re the ones choking on their emissions while they thrive. At similar points in evolution, you wouldn’t bet on the little guy.

Scale

The misunderstanding of self is thus a misunderstanding of scale. Whatever we call intelligence can scale up as big as the bacterial/viral network that regulates the climate of the globe. As Lynn Margulis and Dorion Sagan write in Microcosmos:

All bacteria are one organism, one entity capable of genetic engineering on a planetary or global scale.

If you look at life as it is, it has been microscopic in scale for billions of years and largely still is. There is a very strong argument that we are just spaceships created by microbes to walk about on land. They created a stable primordial soup in our cells, and maintain a stable planet (after having once caused catastrophic climate collapse themselves).

We take it for granted but the oxygen we breathe doesn’t just seep out of rocks. As I wrote earlier in Bacteria Is An Immortal, Living God:

Oxygen went from 0.0001% of the atmosphere to 21% by bacterial processes, and then bacterial cells embedded in plants. This is not some random percentage, any lower and life would die, any higher and the entire world would catch fire. “If oxygen were a few percent higher, living organisms themselves would spontaneously combust.”

Just as we cannot scale down our vision of life (and intelligence), we cannot scale it up. We cannot understand that, as we are made of microbes, artificial intelligence could be made of us. And so we give our energy, legal rights, and political power to corporations, and proudly think that we’re the intelligent ones. Why do we make this assumption? It’s obviously wrong, as they’re the ones choking us. For self-preservation’s sake (as we understand it), we need to scale our vision of potential selves way up.

AI In Imagination

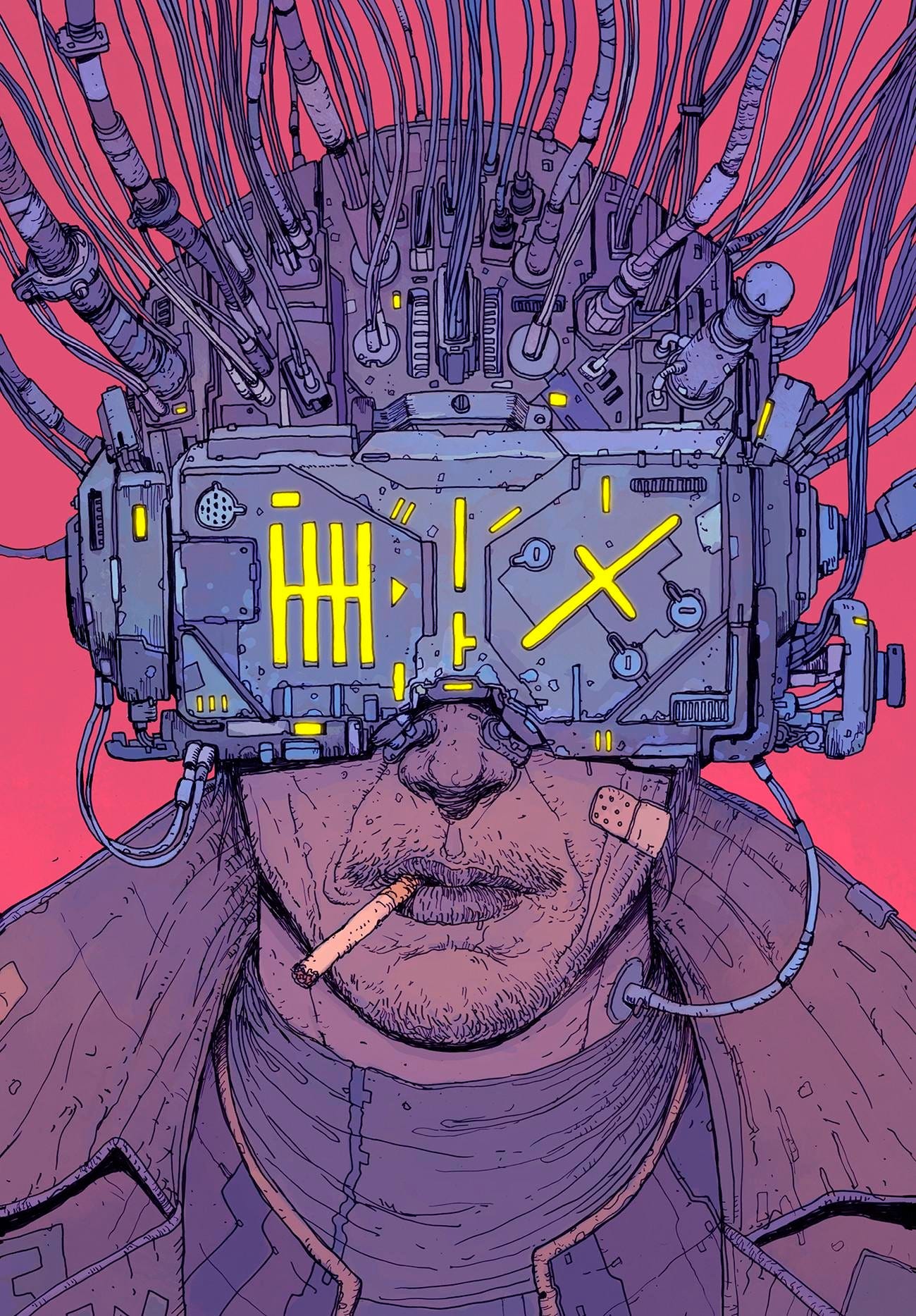

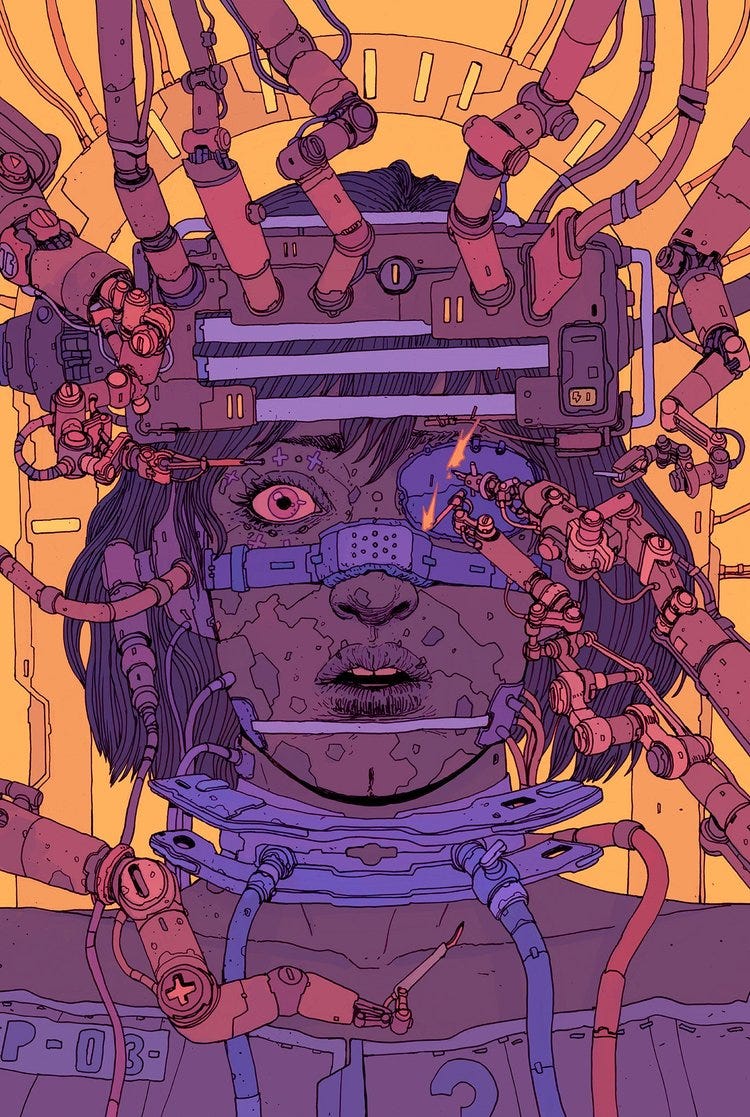

I say this because I was watching Blade Runner (1982), a deeply beautiful film. Like the modern Westworld or basically every AI film ever, it still falls into the trap of defining AI as ‘can I fuck or punch it?’ As essential as this is for cinema, it’s meaningless in the grand scheme of things. I guess films do this because drama is about character, and our brains are deeply wired to see character in a very specific form.

Of course we can’t perceive new species. We’re literally not evolved for it. We have physical brain infrastructure for recognizing faces and emotions because that was very important for millions of years. We have no brain matter adapted for the corporate hive brains that now abound.

Film can only work in the brain of the viewer, like a dream. This is why we dream of biological androids; AI that looks, fucks, and punches like us. It’s not about how they process, it’s about how we process. As Philip K. Dick asked in the story that inspired the film, Do Androids Dream Of Electric Sheep? It’s a very good question.

To me, the closest stories have gotten to understanding AI is William Gibson’s Neuromancer, where the relationship is incomprehensible. The characters just scurry around the innards of AI never really understanding where they are. When the humans do ‘meet’ AI, it’s in digital dreams, where the AI appear as avatars. These avatars are a form taken for the idiot humans, but they’re not what the AI are. What they are is largely incomprehensible, or maybe I just didn’t understand the book well enough.

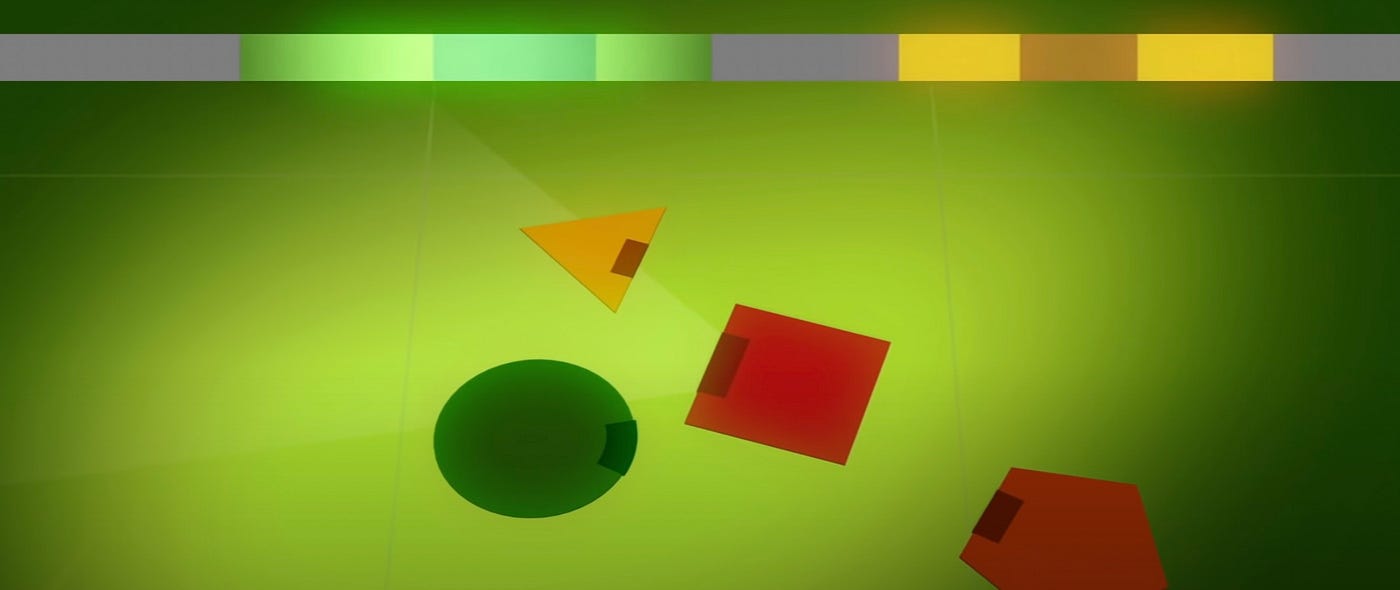

Another way I’ve heard it imagined is the impossibility of a 2D creature understanding a 3D one. A 2D creature (like Senor Square) could be floating around its 2D world, and everything would look like this:

Senor Square just sees a line and everybody else sees lines. This is reality to them. As the video by Alex Rosenthal and George Zaidan says, “Their brains cannot comprehend the third dimension. In fact, they vehemently deny its existence because it’s simply not part of their world or experience.”

In the book Flatland that they’re referencing, one day a sphere shows up and blows their minds. This is a ‘self’ which seems to exist across multiple planes of what they consider selfness. As the 3D shape passes through the 2D planes, it makes up lots of lines.

Such is our experience of perceiving biological life (which operates at both a lower and higher scale) and corporate life, which has been literally enslaving us since the colonial days.

This is the paradox of perception. How can you see in ways you don’t see? How can you think of ways that you can’t think? The crazy thing is that we sorta can, but we just don’t believe it, because it doesn’t match the evidence of our eyes.

I’ve thought about this question for decades, but even I don’t quite believe what I’m writing here. It still sounds crazy to me. But as Senor Square shows us, sometimes you have to think outside of the box. When you’re thinking about brains, you have to think beyond your own brain. And this leads you to the realization that intelligence is everywhere across the natural world, and also growing furiously inside the artificial world that we ‘created’.

This is how we misunderstand AI. We think that we are gods, creating life in our own image. In truth we are gobs, killing everything that actually looks like us for corporations and technology that seem to actively hate us. We keep looking for AI that we can punch, fuck, or enslave and completely miss the appropriate scale. Like bugs in a rug, we are getting squashed in the very tapestry we weave.

I’ve written more about this conception of AI here.